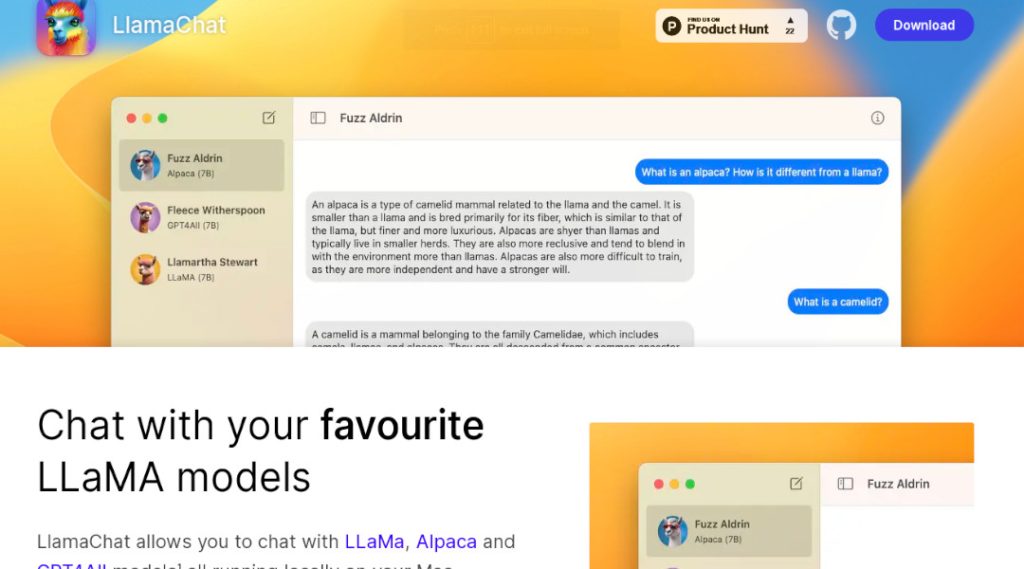

Chat with LLaMA models

LlamaChat lets you chat with LLaMA, Alpaca, and GPT4All models, all running locally on your Mac.

Simple model conversion

LlamaChat can import raw published PyTorch model checkpoints directly, or your previously converted .ggml model files.

LlamaChat is built on open-source libraries, including llama.cpp and llama.swift.

LlamaChat is and always will be entirely free and open-source.