Shap-E: Generating Conditional 3D Implicit Functions

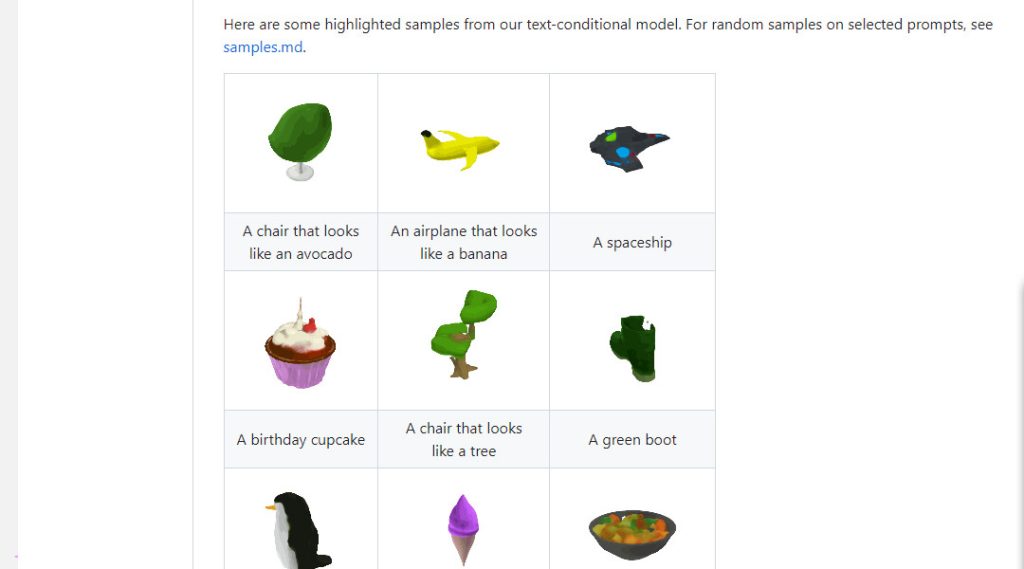

Shap-E is a conditional generative model for 3D assets. Unlike recent work on 3D generative models, which produces a single output representation, Shap-E directly generates the parameters of implicit functions that can be rendered both as textured meshes and as neural radiance fields. Shap-E is trained in two stages. First, an encoder is trained to deterministically map 3D assets to the parameters of an implicit function. Second, a conditional diffusion model is trained on the encoder's outputs. When trained on a large dataset of paired 3D and text data, our models can generate complex and diverse 3D assets in a few seconds. We compare Shap-E with Point-E, an explicit generative model over point clouds.
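To make "generating the parameters of an implicit function" concrete, here is a minimal toy sketch. A flat vector of numbers (a stand-in for what the first-stage encoder would output) is unpacked into the weights of a tiny MLP, and that MLP can be queried at any 3D point. One output is read as NeRF-style density and color, and another as a signed distance usable for mesh extraction. `add_noise` applies the standard DDPM forward process to the same vector, illustrating how a second-stage diffusion model treats these parameter vectors as ordinary data to corrupt and denoise. Everything here is hypothetical and chosen only for illustration: the layer sizes, the tanh/softplus/sigmoid activations, the output layout `[density, sdf, r, g, b]`, the noise schedule, and the function names `param_count`, `unflatten`, `query`, and `add_noise`. None of it is Shap-E's actual architecture or code.

```python
import math
import random

# Hypothetical toy sizes; Shap-E's real implicit MLP is far larger.
IN_DIM = 3    # a query point (x, y, z)
HIDDEN = 8
OUT_DIM = 5   # assumed output layout: [density, sdf, r, g, b]


def param_count() -> int:
    """Number of scalars needed for a 2-layer MLP (weights plus biases)."""
    return IN_DIM * HIDDEN + HIDDEN + HIDDEN * OUT_DIM + OUT_DIM


def unflatten(theta):
    """Split a flat parameter vector into weight matrices and bias vectors."""
    assert len(theta) == param_count()
    i = 0

    def take(n):
        nonlocal i
        chunk = list(theta[i:i + n])
        i += n
        return chunk

    w1 = [take(IN_DIM) for _ in range(HIDDEN)]   # HIDDEN rows of IN_DIM weights
    b1 = take(HIDDEN)
    w2 = [take(HIDDEN) for _ in range(OUT_DIM)]  # OUT_DIM rows of HIDDEN weights
    b2 = take(OUT_DIM)
    return w1, b1, w2, b2


def softplus(x: float) -> float:
    # Numerically stable softplus; keeps density non-negative.
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x: float) -> float:
    # Numerically stable sigmoid; keeps colors in [0, 1].
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def query(theta, point):
    """Evaluate the implicit function defined by theta at one 3D point."""
    w1, b1, w2, b2 = unflatten(theta)
    h = [math.tanh(sum(w * x for w, x in zip(row, point)) + b)
         for row, b in zip(w1, b1)]
    out = [sum(w * v for w, v in zip(row, h)) + b for row, b in zip(w2, b2)]
    return {
        "density": softplus(out[0]),          # NeRF-style volume density
        "sdf": out[1],                        # signed distance (mesh view)
        "rgb": [sigmoid(c) for c in out[2:]],  # color shared by both views
    }


def add_noise(theta, alpha_bar, rng):
    """Standard DDPM forward step: sqrt(a) * x0 + sqrt(1 - a) * eps."""
    eps = [rng.gauss(0.0, 1.0) for _ in theta]
    noisy = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
             for x, e in zip(theta, eps)]
    return noisy, eps


if __name__ == "__main__":
    rng = random.Random(0)
    # Random small values stand in for an encoder's output.
    theta = [rng.gauss(0.0, 0.1) for _ in range(param_count())]
    print(query(theta, (0.1, -0.2, 0.3)))
    noisy, _ = add_noise(theta, alpha_bar=0.5, rng=rng)
    print(len(noisy) == len(theta))
```

The point of the toy is that a single parameter vector defines one function that can be read out in two ways. A renderer would sample `density` and `rgb` along camera rays for the radiance-field view, or extract a surface where `sdf` crosses zero for the mesh view.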

Despite modelling a higher-dimensional, multi-representation output space, Shap-E converges faster than Point-E and achieves comparable or better sample quality. We release the inference code, model weights, and samples.